ChatGPT Agent Mode, and "Vibe Automations"

OpenAI will eat AI automations

Each month, this newsletter is read by over 45K operators, investors, and tech / product leaders and executives. If you found value in this newsletter, please consider supporting it by subscribing or upgrading. This helps support independent AI analysis.

OpenAI just released a new agent (“Agent Mode”) in ChatGPT. Many are calling it “Deep Research meets Operator”, but I think that’s severely underselling the product.

It’s more useful to think of Agent Mode as a virtual employee armed with a MacBook: one you can spin up on-demand for less than $0.50.

And because you can schedule these agents to run repeatedly, we’re now entering the era of Vibe Automations: using ChatGPT to conversationally create multi-step document workflows.

Multi-step means Agent Mode doesn’t just hand you a 30-page research report like Deep Research. It can turn that into a slide deck, repurpose the content into blog posts, upload the assets to Google Drive, and email everything to your team.

That extra bit means ChatGPT can take up more “last mile” knowledge work. The product still has some rough edges (we’ll get to that), but in terms of shaping the future of work, Agent Mode feels more disruptive than Deep Research.

Why? Here’s the dirty secret in vertical/domain-specific AI: most agents in 2025 are glorified multi-step macros for processing documents—filling forms, reviewing PDFs, generating reports, chaining document transformations, mutating call transcripts, etc.

And Agent Mode lowers the barrier dramatically, because it’s conversational and intentionally accessible. No knowledge of agentic prompting, MCPs, or n8n needed.

That’s why it could accelerate timelines for “vibe doing” most of office work, and why ChatGPT is positioned to absorb a significant chunk of the prosumer automation market. The line between prosumer and enterprise AI is going to blur fast.

In the rest of this post, I’ll break down:

what Agent Mode is, conceptually and architecturally

how Agent Mode expands the demand for AI automations as it unlocks a new, conversational experience for building them

thoughts on which AI automations won’t be eaten by ChatGPT, at least over the next year

strategic implications for startups, as well as key risks to OpenAI’s goal of disintermediating Microsoft and Google Workspace

I’d love to connect with my readers and hear about any specific blockers you are facing in AI adoption. If you are a business about to undertake a large AI project, I am offering a free AI strategy audit (appointment link sent by email after review).

What is Agent Mode?

Agent Mode is a new ChatGPT feature that lets you spin up a virtual computer—running inside OpenAI’s servers—complete with a browser, terminal, file system, and integrations with Google Drive, SharePoint, and more. An AI agent operates within this virtual machine to autonomously perform complex, multi-step tasks.

In plain English: it’s like giving a smart assistant a Chromebook and letting it get to work. And you can spin up several of them, in parallel, for less than a buck.

Compared to Deep Research, Codex, or Operator, the new Agent Mode is decisively general purpose, since it has access to a full computer, which is itself a general-purpose tool.

This generality opens up a lot of use cases, and imagination is your main bottleneck. The real magic comes from the Terminal tool, which Agent uses to call APIs, create files (remember, a PPT is just a file after all), and do practically anything in “cyberspace”.

Some fun use cases:

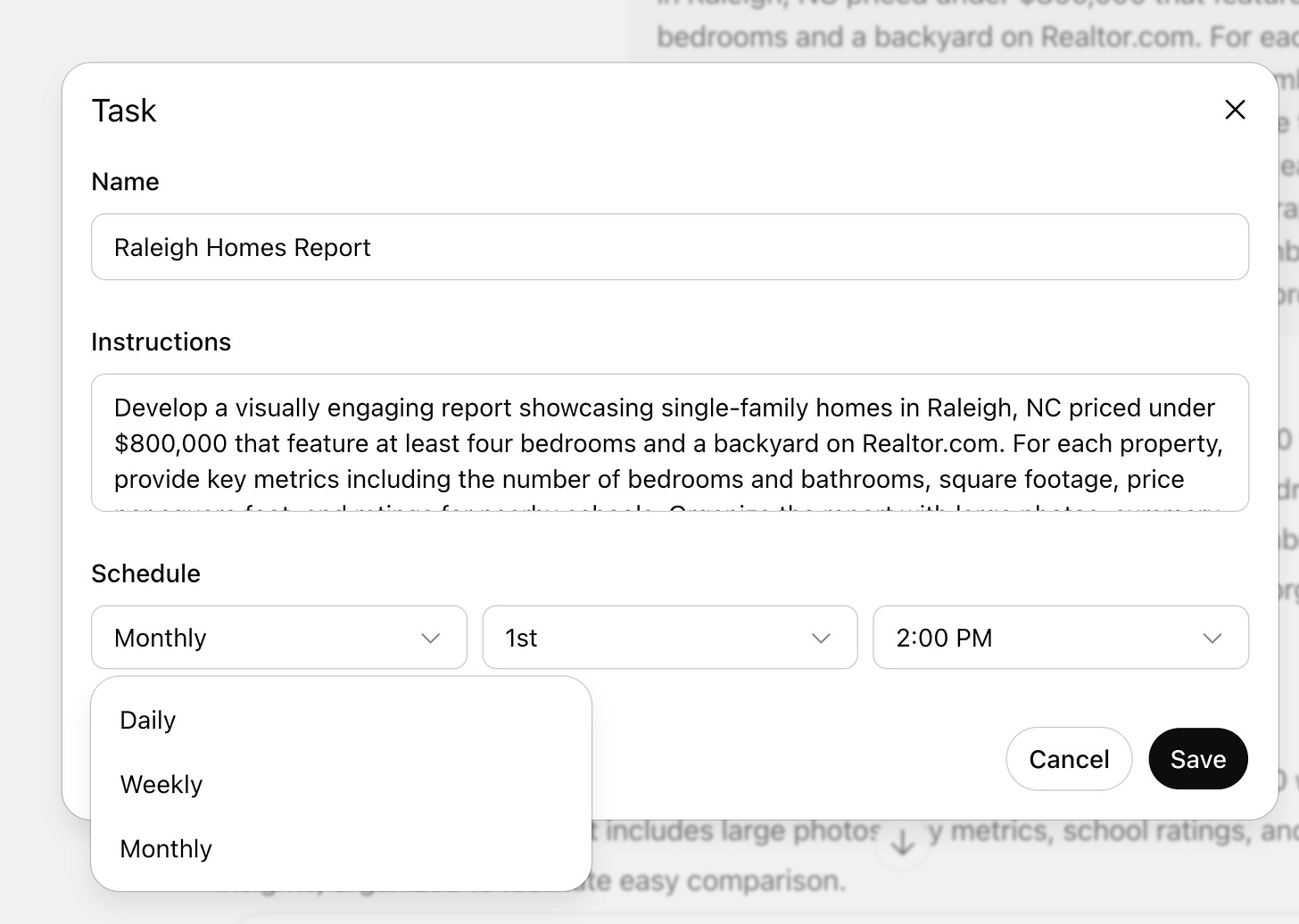

Web research and report drafting: Find a set of homes under $800K in Raleigh, NC

Design and coding: Perform a redesign of Wikipedia in the styles of Airbnb and OpenAI websites, respectively.

Strategy and competitive research: Create a partnership strategy for Daytona, which is an AI sandbox platform, and create a slide deck version of it

This capability explosion follows from a core principle of AI agent design: capabilities are primarily constrained by two factors, namely how powerful the tools are and how capable the orchestrating model is (see my free guide to AI agents: “AI Agents Explained From Scratch”).

In reality, each of Agent Mode’s “tools” is an AI agent itself, making the entire feature an agent hierarchy.
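To make the hierarchy idea concrete, here is a minimal Python sketch. Everything in it (`ToolAgent`, `Orchestrator`, the `browser` and `terminal` tools, and the hard-coded routing) is a hypothetical illustration, not OpenAI's actual design. A real orchestrator would use a model to decide which tool to call, and each tool would wrap its own model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class ToolAgent:
    """A 'tool' that is itself a small agent (hypothetical stand-in)."""
    name: str
    handle: Callable[[str], str]


@dataclass
class Orchestrator:
    """Top-level agent that delegates each subtask to a tool agent."""
    tools: Dict[str, ToolAgent]
    log: List[str] = field(default_factory=list)

    def run(self, subtasks: List[Tuple[str, str]]) -> List[str]:
        results = []
        for tool_name, instruction in subtasks:
            tool = self.tools[tool_name]          # pick the sub-agent
            results.append(tool.handle(instruction))
            self.log.append(f"{tool_name}: {instruction}")
        return results


# Stand-in tool agents; real ones would browse or execute commands.
browser = ToolAgent("browser", lambda task: f"[browser] visited pages for: {task}")
terminal = ToolAgent("terminal", lambda task: f"[terminal] ran commands for: {task}")

agent = Orchestrator(tools={"browser": browser, "terminal": terminal})
results = agent.run([
    ("browser", "find listings under $800K"),
    ("terminal", "write listings.csv"),
])
```

The point of the sketch is only the shape: the top-level agent's capability is bounded by what its tool agents can do and by how well it routes work to them.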

Another key feature is Agent Mode’s ability to proactively ask you for confirmation, assistance, or help logging into websites.

The interruption is bi-directional: you can interrupt the agent mid-run, and the agent can pause and ask you for confirmation before taking key actions like sending an email or updating a shared drive.

That’s why the “MacBook-equipped virtual employee” analogy works. You don’t just prompt it. You task it. You can interrupt mid-execution, change direction, or provide missing context.

That kind of two-way control makes it feel less like a bot and more like a collaborator. And because it can operate across tools and modalities, Agent Mode isn’t just executing steps—it’s orchestrating workflows. Your relationship with technology changes.

That’s the leap.

With Agent Mode, we also transcend the “Artifacts” UX. With Artifacts, you had to be constantly in the loop, steering the AI. This tight human-in-the-loop experience has its place, but it is not ideal when you want to get a huge volume of very mundane work done.

Why This Changes AI Automations

Here’s the most underrated detail in the entire launch: Agent Mode can be scheduled.

That alone hints at where this is headed. We’re approaching a new phase of automation UX: instead of wiring up agentic workflows, you’ll just declare what you want done and when—and the agent will handle the orchestration in the background.

This doesn’t mean n8n or Zapier are obsolete. But the era of custom workflows just to generate or massage documents is ending fast.

The new way to build automations will be to:

State your entire process (SOP)

The ChatGPT agent breaks it down for you and takes whatever actions it can inside its VM

You interact with the agent over ChatGPT as well - unblocking the agent where you are needed for steering and guidance, or providing sensitive data, etc.
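The three steps above can be sketched as a small loop. This is a toy model under my own assumptions: `Step`, `run_sop`, and the `ask_human` callback are hypothetical names, and the "approval" function stands in for the ChatGPT conversation where you unblock the agent.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    description: str
    needs_human: bool = False  # e.g. a login, or sending an email


def run_sop(steps: List[Step], ask_human: Callable[[str], bool]) -> List[str]:
    """Run steps in order; pause on flagged steps and skip them if declined."""
    transcript = []
    for step in steps:
        if step.needs_human and not ask_human(step.description):
            transcript.append(f"skipped: {step.description}")
            continue
        transcript.append(f"done: {step.description}")
    return transcript


# The SOP, stated up front.
sop = [
    Step("pull last week's orders"),
    Step("summarize top-performing SKUs"),
    Step("email the slide deck", needs_human=True),
]


def approve(description: str) -> bool:
    """Stand-in for the chat UI: decline anything that sends email."""
    return "email" not in description


transcript = run_sop(sop, approve)
```

The human only shows up at the flagged step; everything else runs unattended, which is the whole appeal.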

Imagine these workflows:

“At 9am every Monday, pull last week’s Shopify orders, summarize top-performing SKUs, and email me a formatted slide deck with graphs.”

“Every Friday, update the partnership_strategy_deck.pptx with the latest info from quarterly_business_review.docx, especially paying close attention to the appendix tables that have the latest MBR metrics.”

And so on.

That’s no longer a fantasy. It’s already here.
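For a sense of what “declare what you want done and when” boils down to, here is a rough sketch of the Monday 9am example as data plus one scheduling function. The `automation` dict and `next_weekly_run` are my own hypothetical names; I have no visibility into how ChatGPT stores or triggers scheduled tasks.

```python
from datetime import datetime, timedelta


def next_weekly_run(now: datetime, weekday: int, hour: int) -> datetime:
    """Next occurrence of `weekday` (Monday=0) at `hour`:00, strictly after `now`."""
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:
        candidate += timedelta(days=7)  # this week's slot already passed
    return candidate


# The whole "automation" is a prompt plus a schedule.
automation = {
    "prompt": "Pull last week's Shopify orders, summarize top-performing SKUs, "
              "and email me a formatted slide deck with graphs.",
    "weekday": 0,  # Monday
    "hour": 9,
}

now = datetime(2025, 7, 23, 15, 30)  # a Wednesday afternoon
run_at = next_weekly_run(now, automation["weekday"], automation["hour"])
```

Notice what is missing: no nodes, no triggers, no field mappings. The orchestration lives in the agent, not in the spec.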

Now, Agent Mode doesn’t have webhook support, very low latency, SLAs, or a host of other enterprise requirements, so this mode of creating and consuming AI automations won’t eat all AI agents or automations.

But the point is: Agent Mode lowers the hurdle to create more personal automations, compared to the “imperative mode” of agent building.
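One way to read the caveats above is as a checklist. The sketch below only encodes the three gaps I named (webhooks, low latency, SLAs); the `Requirements` class and `suggest_approach` function are hypothetical, and a real decision would weigh far more than three booleans.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    needs_webhooks: bool = False     # must react to external events
    needs_low_latency: bool = False  # must respond in near real time
    needs_sla: bool = False          # needs uptime or support guarantees


def suggest_approach(req: Requirements) -> str:
    """Suggest an imperative workflow if any listed gap applies."""
    blockers = [name for name, needed in vars(req).items() if needed]
    if blockers:
        return "imperative workflow (" + ", ".join(blockers) + ")"
    return "conversational agent"


personal = suggest_approach(Requirements())
event_driven = suggest_approach(Requirements(needs_webhooks=True))
```

Here `personal` comes back as a conversational agent, while `event_driven` is pushed toward an imperative workflow because of the webhook requirement.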

The immediate impact? Prosumer workflows: solo operators, lean RevOps teams, AI-first freelancers. All those Airtable–n8n–Zapier elbow-grease stacks are now vulnerable (although they are safe as long as ChatGPT doesn’t introduce webhooks).

Whether OpenAI pushes further into this space will depend on its feature velocity and appetite for workflow UX. But even in its current form, Agent Mode already makes parts of the AI automation stack feel redundant.

That said, AI automations as a discipline, and the skills required to build them, will remain important, especially if you are an internal team building operations from within.

As with all things AI, the rate of adoption will be uneven and staggered. So while I don’t expect Vibe Automations to take over all basic automations right away, they will slowly but surely eat away at use cases now served by AI workflows built imperatively.

Here’s a quick decision matrix on where AI automations are trending, based on your concerns.

The Technical Leap

Even if you set aside all the UX magic, this is a serious technical achievement.

What’s really impressive is that ChatGPT agents can run for almost an hour and stay consistently on task, which suggests that OpenAI unlocked a few additional breakthroughs since the February release of Deep Research, which demonstrated up to 30 minutes of agentic runtime.

To summarize the interesting technical bits:

The agent uses a custom agentic model trained with end-to-end RL, which can run tasks for more than 50 minutes (I personally confirmed this with this task). This compares to the agent behind Deep Research, which rarely ran for longer than 30 minutes.

The custom model seems to be specifically solving for better PPT and Excel performance.

The latency and quality of web browsing seem to have improved at least 10x over whatever was used in Operator, and the agent doesn’t get stuck as often when clicking through websites.

The improvement in Excel manipulation is perhaps the most striking, since it was an area that many experts (wrongly) claimed was out of reach for LLMs due to precision and reasoning limits.

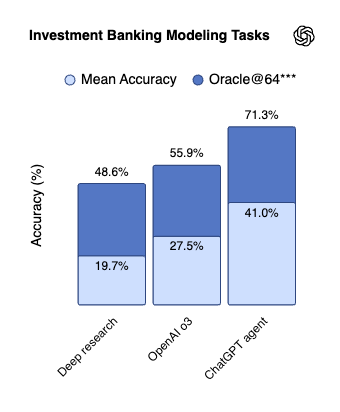

The chart shows how Agent Mode achieved a step jump on junior IBD analyst tasks compared to o3 (27.5% → 41%, versus a human level of 71%). Based on current trends, we’re on track for human-level spreadsheet proficiency within 12–18 months.
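As a back-of-the-envelope check on those numbers, here is the gap arithmetic. The three scores come from the chart; the assumption that every future jump is the same size as this one is mine, and it is a crude one, since the timeline then depends entirely on how often such jumps ship.

```python
# Scores (%) on junior IBD analyst tasks, as quoted from the chart.
o3_score = 27.5
agent_score = 41.0
human_score = 71.0

gain = agent_score - o3_score          # points gained in this jump
remaining = human_score - agent_score  # points left to reach human level

# Assumption: each future jump closes the same number of points.
jumps_needed = remaining / gain

print(f"gain: {gain} pts, remaining: {remaining} pts, "
      f"jumps needed at this pace: {jumps_needed:.1f}")
```

In other words, this one release closed 13.5 points, and 30 points remain, so a bit more than two further jumps of the same size would be needed.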

Of course, it’s nowhere near “production grade” in terms of producing a pixel-perfect three-statement model for an LBO transaction, let alone passing an investment banking interview.

However, that’s the wrong way of looking at progress.

Agent Mode is already good at “compiling” a bunch of numerical data and producing an “initial starting point” sheet for an analyst to jump off from. That alone is a time saver.

If Agent Mode were combined with a Bloomberg, FactSet, or Capital IQ MCP server, then it’s plausible that 90%+ of the data gathering work done by knowledge management BPOs in India would be at risk.

My advice: fork LibreOffice and build a Cursor for Excel, since it’s inevitable.

The Bigger Strategic Implications

Agent Mode is a direct move toward making ChatGPT the control panel for work.

If you squint, it’s Cursor Agent for the average office worker, or anyone who manipulates data, creates documents, or runs recurring processes inside SaaS tools.

That includes marketing analysts, bizops folks, HR teams—pretty much every knowledge worker.

This puts OpenAI on a collision course with vertical AI startups. Because if Agent Mode can do 80% of what your AI-powered legal assistant or sales deck generator does—and it’s native inside ChatGPT—it becomes increasingly hard to justify paying for yet another point solution (note: OpenAI already had something similar to Agent Mode being piloted to select Enterprise customers).

Many vertical agents have been riding an arbitrage: packaging basic document workflows in a cleaner UI. But if ChatGPT lets users declaratively request those same outcomes, and does it with better infrastructure and tighter integration, the value prop starts to erode.

That’s where OpenAI’s strategy gets even sharper: it’s using integrations as the lock-in wedge. Want the agent to perform better? Give it access to your Notion, your Google Drive, your Salesforce.

The more data it sees, the more competent it becomes. That feedback loop—data → performance → usage → more data—is exactly how platform moats get built.

We’re already seeing similar moves from Anthropic, which recently launched “Anthropic Finance” with native integrations to data providers like FactSet. The pitch is simple: “Want your data to show up in agents? Partner now.”

OpenAI hasn’t formally announced the same thing—but make no mistake, that’s where this is headed.

Rough Edges and Timelines

After about 20 Agent Mode runs, I found that the usual rules of prompting all still apply to ChatGPT agents:

Agents can’t “guess” what you want, so you have to be specific about your desires, and add relevant details

At the same time, don’t be too prescriptive, or the agent will doggedly follow whatever you told it and leave no room for creativity

Agents can’t do things they lack the data or the tools for

That said, the real limitations mainly concern Agent Mode’s 1) latency and 2) performance, especially when building slides or creating spreadsheets.

While providing a “visual reference image” to the agent helped, I think it will be a good 6 months to 1 year (purely my guess) before ChatGPT agent can one-shot a perfect PowerPoint presentation. One-shotting financial models may take longer (2 years?).

But the point is, this all seems inevitable.

What Could Derail OpenAI’s Agent Mode

The biggest risk is that Agent Mode’s “virtual computer” architecture hits a ceiling.

It's powerful, but maybe not the final form. The endgame is likely agentic, on-device control, where the agent runs as you, inside your computer, with full control over your real environment.

That will get more pushback, but it’s also an inevitable market that needs to be served. It requires stronger edge models, tighter security, and possibly a new device wave. This may take years due to hardware limitations, which means ChatGPT’s virtual-computer approach may persist for a few years.

Meanwhile, the other big risk to OpenAI’s Agent Mode is simply other tech companies, especially CDN providers and publishers.

For example, Cloudflare has started blocking LLM scrapers, including OpenAI’s.

Substack and Reddit blocked Operator, and are doing the same for Agent Mode (in fact, Substack won’t even let me sign in through Agent Mode). More sites will follow.
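For readers who haven’t seen how a publisher opts out, here is a small sketch using Python’s standard `urllib.robotparser`. Some loud caveats: the robots.txt content and URL below are made up for illustration, robots.txt is a voluntary signal rather than enforcement, and network-level blocking of the kind Cloudflare does works differently. `GPTBot` is the user agent OpenAI publishes for its crawler; whether Agent Mode’s browser traffic is covered by rules like this is not something this example demonstrates.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block OpenAI's crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse inline text, no network call

url = "https://example.com/p/some-post"
gptbot_allowed = parser.can_fetch("GPTBot", url)
other_allowed = parser.can_fetch("ExampleBrowser", url)

print("GPTBot allowed:", gptbot_allowed)
print("Other agent allowed:", other_allowed)
```

Two lines of text are enough for a site to declare itself off-limits to a specific AI crawler while staying open to everyone else, which is why this kind of opt-out spreads so quickly.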

Essentially, the Internet is fragmenting into a bunch of walled gardens, and that’s not good.

If authenticated browsing becomes brittle or legally fraught, many of Agent Mode’s use cases get neutered fast.

And then there’s the competitive timeline. Expect Claude and Gemini to ship their versions of Agent Mode within the next 90 days. Once that happens, it becomes a race to UX and vertical depth. Whoever nails orchestration, memory, and app control will own the next layer of computing.

Closing Thought

Agent Mode turns ChatGPT from a chatbot into a workspace.

It doesn’t just answer questions. It runs errands. Formats deliverables. Moves data across tools.

If you’re building agentic workflows or automating back-office tasks, take ChatGPT agents seriously.

The bar just rose. Expectations just shifted.

About Me

I write the "Enterprise AI Trends" newsletter, read by over 45K readers worldwide per month.

Previously, I was a Generative AI architect at AWS, an early PM at Alexa, and the Head of Volatility Index Trading at Morgan Stanley. I studied CS and Math at Stanford (BS, MS).