Recently, the Information reported that a 2 year old Legal AI startup called Harvey is raising $600m at $2b valuation, and using the proceeds mostly to acquire a 26 year old, PE-backed, legacy LegalTech company, vLex.

What’s interesting here isn’t the eye watering valuation target of Harvey (almost 100x ARR), but that Harvey's using the funds to buy a proprietary legal dataset and a customer base. With this deal, Harvey’s spending more money on M&A than it ever spent on “AI”, e.g. LLMs or engineers.

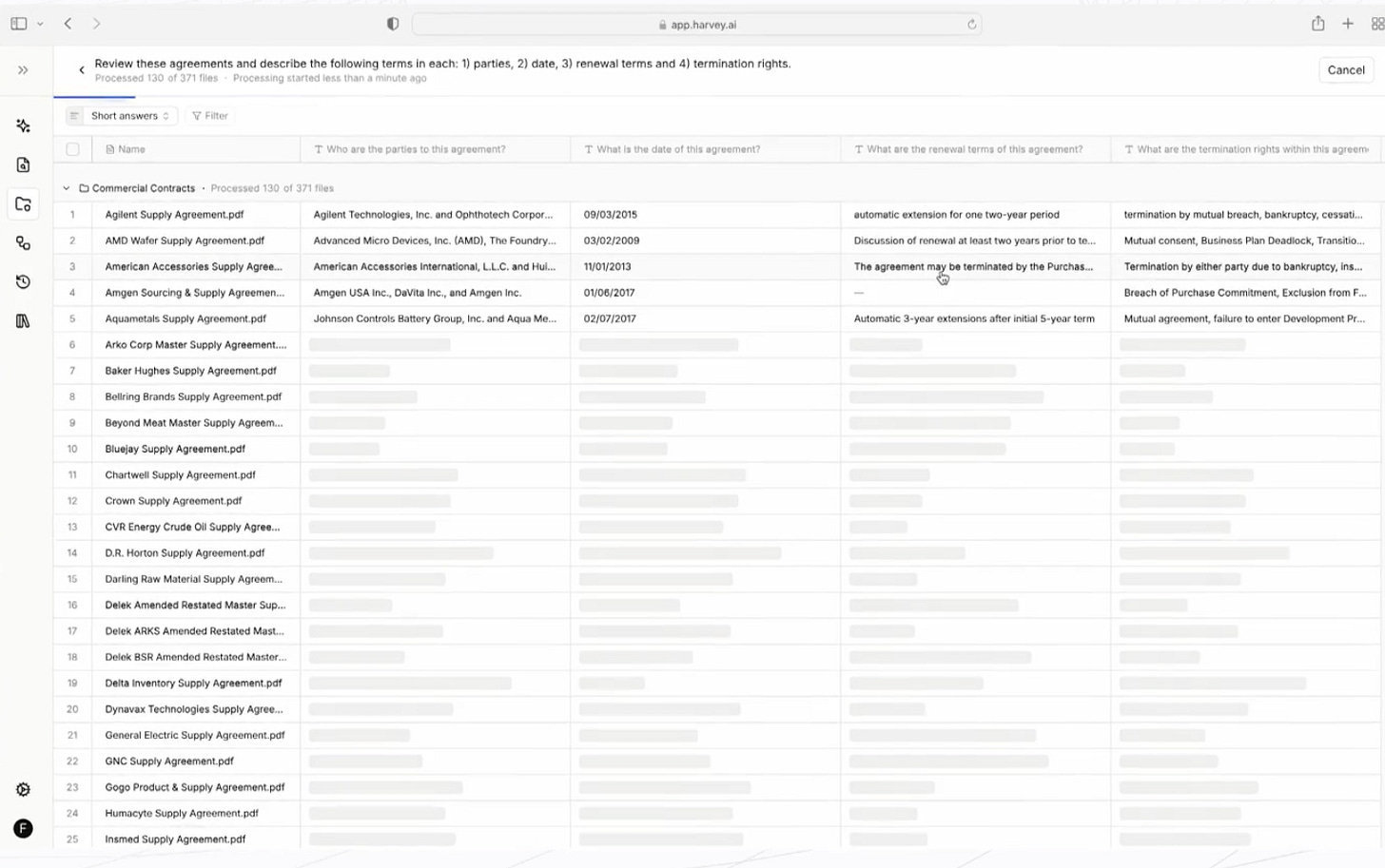

This news felt like an unintended admission by Harvey that its product-market-fit is tenuous, and acquiring a legacy platform (with its proprietary data) is necessary to the company’s growth. After all, Harvey’s a legal research platform at its core, and what good is a research platform that doesn’t have all the data?

In other words, proprietary data > AI features. It’s a testament to the idea that AI is more often a feature, not the product.

This news also signals moat-building in AI startups is starting to change in three ways: 1) that moats won’t be cheap, 2) more money will be spent on traditional moat-building mechanisms like buying data, buying growth, M&A, etc, and 3) not so much on “AI” on a relative basis, especially since the variable costs of “AI” are coming down.

After all, the core functionality of Harvey is legal research, which means having the depth and breadth of data is crucial. In a similar vein, every application-layer AI startup (derisively called ChatGPT wrappers) will need to figure out what they need to do to be defensible.

It also signals that having better UX and AI features compared to the incumbents is insufficient to win over more enterprise customers. In fact, almost all legacy legal research platforms have added basic semantic search and RAG features in the past 18 months, albeit not as polished as Harvey or other AI startups.

So what are the implications?