The Vibe Coding Bottleneck Problem

Why enterprises are structurally disadvantaged in adopting vibe coding, and what they can do about it

In this post, I’ll get to the absolute crux of why enterprises are at an enormous structural disadvantage versus startups when it comes to vibe coding, and what they can do about it.

Vibe coding accelerates just one part of the software development cycle: feature development. This creates enormous bottlenecks both upstream and downstream of coding, in areas such as security reviews, SRE, and even product management. To truly lift output, every part of software development needs to be elevated and re-imagined for the AI-native era.

Vibe coding is going mainstream. Ryan Dahl (creator of Node.js), Andrej Karpathy, and a growing number of graybeards have embraced spec-driven, vibe-first development. Enterprises are finally ready to adopt at scale.

But as execution speed increases, the software “supply chain” (from turning an idea into a PR, to reviewing it, securing it, shipping it, and operating it) is starting to break.

Most companies are framing this as a skills question: “What do we need to learn to move faster?” I think that’s the wrong frame. It’s not primarily a skill issue. It’s a supply chain and incentives issue.

In any production system, the final output is determined by the slowest stage. You can double capacity at one step and still see almost no improvement in finished output if the constraint sits elsewhere. The result looks like progress, with work-in-progress piling up everywhere, while the thing you actually care about (shipped, reliable functionality) barely moves.
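To make the arithmetic concrete, here is a toy Python model. It is not a real capacity-planning tool: the stage names and weekly capacities are made-up numbers, and it assumes delivery is a simple serial pipeline whose finished output is capped by its slowest stage.

```python
# Toy model (illustrative numbers only): weekly capacity of each delivery stage,
# measured in changes the stage can process per week.
def pipeline_throughput(capacities: dict[str, int]) -> tuple[str, int]:
    """Return the bottleneck stage and the pipeline's finished output per week.

    In a serial pipeline, finished output can't exceed the slowest stage.
    """
    bottleneck = min(capacities, key=capacities.get)
    return bottleneck, capacities[bottleneck]


before = {"product": 30, "development": 20, "review": 25, "deploy": 40, "ops": 35}
# Vibe coding multiplies development capacity, but nothing else changes.
after = {**before, "development": 150}

print(pipeline_throughput(before))  # ('development', 20)
print(pipeline_throughput(after))   # ('review', 25)
```

In this sketch, development capacity rises 7.5x, yet finished output only goes from 20 to 25 changes a week, because review becomes the new constraint.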

This leads to a revelation: even if OpenAI or Anthropic “solves” coding, many organizations still won’t unlock the value, because their constraint won’t be coding anymore. It’ll be everything around coding.

You can see the shape of the problem by treating software delivery like a knowledge-work supply chain:

product & design (deciding what to build and what “good” looks like)

development (turning specs into code)

review & testing (quality, correctness, maintainability)

deployment (release mechanics, change management, rollback plans)

operations (reliability, monitoring, incident response, cost control)

Over the past decade, DevOps and microservices gave enterprises some real benefits, but also turned delivery into a distributed pipeline with many handoffs and many “humans in the loop” who own specific gates. That worked when coding was the slow part. It breaks when coding becomes fast.

Upstream, product managers and designers become the constraint: if you can implement ten times more than you can sensibly prioritize and specify, the backlog of “things we could build” turns into noise.

Downstream, reviewers and security teams become the constraint: if you can generate 150 PRs a week, but your org’s approval capacity is built for 20, work piles up. People route around the gate (“just merge it”), or they wait and the productivity gains evaporate.
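The review-queue math is just as unforgiving. Here is a minimal simulation, assuming constant arrival and review rates and nobody routing around the gate (real queues are burstier than this):

```python
def review_backlog(arrivals_per_week: int, reviews_per_week: int, weeks: int) -> list[int]:
    """Simulate the open-PR queue week by week.

    Toy model: constant rates, no PRs merged without review.
    """
    backlog = 0
    history = []
    for _ in range(weeks):
        backlog += arrivals_per_week
        backlog -= min(backlog, reviews_per_week)  # can't review more than is queued
        history.append(backlog)
    return history


queue = review_backlog(arrivals_per_week=150, reviews_per_week=20, weeks=4)
print(queue)  # [130, 260, 390, 520]
# Rough wait for a PR opened now: everything ahead of it must be reviewed first.
print(queue[-1] / 20, "weeks")  # 26.0 weeks
```

Under these assumptions, a PR opened after four weeks waits roughly half a year, which is why teams either start merging unreviewed or give up the speedup.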

The chip supply chain analogy is useful here. When AI chip demand exploded, the constraint wasn’t “making more GPUs” in the abstract. It was specific stages and components that didn’t scale at the same rate. You can ramp fab capacity, but if packaging capacity or HBM supply lags, finished systems don’t ship proportionally. The whole pipeline is paced by the slowest component.

Vibe coding creates the same dynamic: your shipping velocity is only as fast as the least-scaled stage in your delivery pipeline. And that stage is often a human approval process. AI increases “work creation.” It doesn’t automatically increase “output.”

In this post, I’ll walk through what that implies, and where the opportunities are.


Why Enterprises Will Struggle (And Startups Can’t Afford To)

Startups aren’t advantaged just because they’re smaller. They’re advantaged because they’re allowed to rapidly change the shape of the manufacturing process.

They don’t have the luxury of preserving legacy gates, legacy ownership boundaries, and legacy incentives.

Enterprises, by contrast, spent years building elaborate change management processes: review rituals, approval committees, compliance gates, separation of duties.

Ten years of microservices and DevOps evangelism, championed by Netflix and AWS, took a toll. For one, it deepened data and knowledge silos, while AI agents work best when all the context is aggregated in one place.

Having 500+ internal codebases that depend on each other over the network complicates things for AI agents, simply because they can’t easily gather all the requisite context. That’s one advantage of monorepos.

Now, elaborate release management processes and SOA (service-oriented architecture) are fine in a slower world. But organizations also learn to live inside their bureaucracy. They build kitchens designed for many chefs, and then act surprised when cooking takes longer.

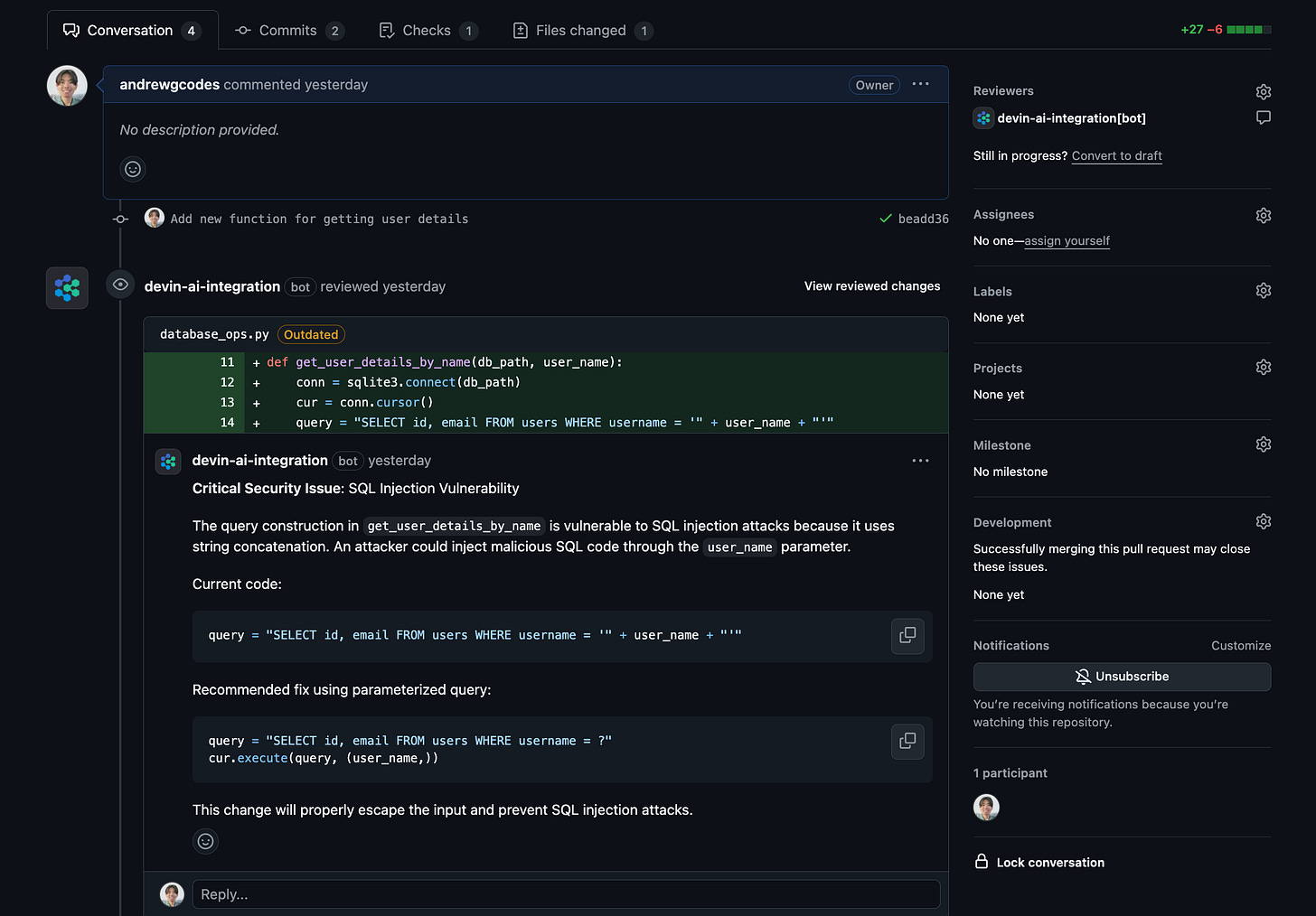

When vibe coding hits an enterprise without a corresponding redesign of the downstream system, the “sacred” parts become the constraint. Code review is a perfect example.

Code review is still fundamentally human-driven. It’s the same handful of senior engineers who could barely keep up with 20 PRs a week. Now they’re staring at 150. PRs pile up, queues grow, lead time explodes.

Developers either start merging without review (risk spikes), or they wait (and the productivity gain disappears). You end up with the worst of both worlds: more change and less control.

And code review is just the beginning.

Security is full of manual gates: threat modeling, exception approvals, dependency risk decisions, and compliance sign-offs that don’t compress just because code generation got cheaper.

Testing often still depends on human QA for edge cases, exploratory validation, and acceptance criteria that weren’t crisply specified. Deployment can still require approvals from multiple teams because “who gets paged at 2am” is a political question, not a technical one.

Then you get the organizational multipliers: siloed tools (security and dev working in different systems), incentives that reward blocking (“not my job”), and blame culture that treats bottlenecks as safety (“if it breaks, at least it wasn’t on me”). Cultural inertia becomes a real issue (“we’ve always done it this way”). All of this prevents vibe coding from even getting bootstrapped.

So the real enterprise failure mode isn’t that they can’t adopt vibe coding. It’s that they adopt it, generate a flood of output, and then discover their organization can’t stomach any real change.

Food for thought: do they even want faster change?

At that point, assuming AI keeps progressing, there are only two ways forward: redesign the org chart and processes (rework the gates, change incentives, collapse handoffs), or reduce work-in-progress by cutting people and scope, i.e., the ugly path of “lightening the ship” through layoffs and consolidation.

Startups don’t have that option set. Bottlenecks are existential, not annoying. They can’t wait weeks for approvals. They can’t ship broken code because QA couldn’t keep up. They’re forced, by survival, to re-architect their delivery loop around speed, clarity, and tight feedback.

But do enterprises have the luxury of working at the enterprise cadence, especially going forward? That remains to be seen. My conversations with CTOs indicate that things are changing fast in 2026.

Which raises the real question: if “writing code” stops being the limiting factor, what becomes the new scarce resource, and what do you rebuild first so the whole system can actually go faster?


The TAM Explosion for DevSecOps Automation

One consequence of this bottleneck problem is that there will be an explosive need to automate everything upstream and downstream of vibe coding.

By necessity, every mature company adopting vibe coding needs to automate review, security scanning, testing, and ops to avoid the bottleneck problem. Again, your processes move only as fast as your least automated stage.

This will lead to an explosion in the TAM for better tools and services around DevSecOps. The demand will grow in lock-step with the progress in agentic models. The better the foundational models become, the greater the demand for process automation.

Interestingly, the TAM for pipeline automation tools scales with code volume, not headcount.

That’s a fundamentally different growth trajectory than traditional dev tools SaaS, which scale with team size.

In anticipation of this demand, the labs and the leading startups (e.g., Cursor, Cognition) have recently ramped up their offerings in DevSecOps. For example:

OpenAI keeps investing in security agents for Codex because they know security bottlenecks block enterprise adoption. You can’t sell an enterprise CTO on “generate code 10x faster” if the response is “great, now I have 10x more code to security review and I don’t have the team for it.”

Cursor acquired Greptile and launched Bugbot because they’re vertically integrating the toolchain. Greptile helps with codebase understanding and search, which is critical when you’re reviewing AI-generated code that touches unfamiliar parts of the stack. Bugbot automatically reviews PRs to catch bugs. They’re building the full pipeline: generate code, understand it, debug it, ship it. They can’t sell “10x code generation” if that code can’t ship safely.

On a longer horizon, Cursor seems to be aiming for vertical integration across the entire software development supply chain.

In response, Cognition has also recently released an incremental upgrade to its code review offering.

Either way, the market map for “automating non-programming tasks” is taking shape. However, adoption of “vibe coding adjacent” tools has been incredibly slow in enterprises, mainly because of inertia. That’s why Cursor and Cognition, which have been leaning more toward B2B and enterprise recently, are actively working to help customers in this space.

Still, labs like Anthropic and OpenAI will capture most of the value by bundling basic automation into their coding tools. Claude Code already ships with security review and code review agents.

However, the TAM is so large that there’s room for nimble startups to provide best in class offerings in all areas of the software supply chain, and be successful. I share my thoughts on this later in this post.

Some Tactical Advice for Enterprises

For companies that want greater feature velocity, start investing in automation right now, both upstream and downstream to vibe coding.

Small startups have the advantage of being unburdened by reputation risk and by large, heavy org charts and processes.

The leading AI labs, which still retain many of startups’ advantages, can also vibe code solutions at breakneck speed. That’s how Anthropic shipped Claude Cowork in 10 days, 100% vibe coded.

But what about traditional enterprises? They are caught in limbo, staring at a big pile of outdated processes, cultural issues, and technologies that keep them from shipping faster. Almost none of these blockers are related to the performance of agentic AI models.

So here’s what I’d advise enterprises. Don’t do sweeping org changes yet, but focus on these three priorities to get the ball rolling and score quick wins.

Priority 1: Improve code review agent performance by investing heavily in documentation

Needless to say, code reviews need to be streamlined, but with a catch. Humans in the loop are necessary for approving the important PRs. 100% automation of code reviews is not desirable or possible, because human developers need to retain an understanding of the code base.

But that doesn’t mean the reviewer needs to read every line of code written by AI. AI can make code reviews more pleasant through better UX, summaries, and so on, so that not every line of code needs to be eyeballed. A ton of new products have launched just to streamline code review; take advantage of them.

Now, for code review agents to be effective, your team has to put in the work to teach AI agents your company- and domain-specific preferences. That’s where the real work is: teaching the AI.

Out of the box, AI agents won’t understand your company-specific terms, like what AbstractCQASequencerController means (I made that up). One effective approach is to leverage LLMs to generate better documentation for obscure business logic, which makes code review AI agents more effective.

What always works: providing AI agents with more context via markdown files inside code repositories. You need to invest some effort upfront to write clear documentation that AI agents can read into their context. These docs could cover your internal APIs, preferred architectural patterns, security constraints, etc.
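As a rough illustration of what “reading docs into context” can mean for a homegrown review bot, the sketch below concatenates every markdown file under a docs folder into a single context string. The helper name, the folder layout, and the character budget are all hypothetical; off-the-shelf tools have their own conventions (Claude Code, for instance, picks up CLAUDE.md files), so treat this only as a picture of the idea.

```python
from pathlib import Path


def build_agent_context(doc_dir: Path, max_chars: int = 20_000) -> str:
    """Concatenate markdown docs into one context string for a review or coding agent.

    Hypothetical helper: files are included in sorted path order, each prefixed
    with its relative path so the agent can cite where a rule came from.
    Stops adding files once the character budget would be exceeded.
    """
    parts: list[str] = []
    used = 0
    for path in sorted(doc_dir.rglob("*.md")):
        body = path.read_text(encoding="utf-8").strip()
        section = f"## {path.relative_to(doc_dir).as_posix()}\n\n{body}\n"
        if used + len(section) > max_chars:
            break  # crude budget: real tools would rank or summarize instead
        parts.append(section)
        used += len(section)
    return "\n".join(parts)
```

You would then prepend the returned string to the prompt you send your review agent, e.g. `build_agent_context(Path("docs/agents"))`, where the `docs/agents` path is an assumption for illustration.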

Now, the prospect of writing docs makes many developers’ heads explode, but the good news is that Claude Code is great at generating these documents. Use it.

Unfortunately, in my experience, most enterprises really struggle with documenting even the most basic things about how they ship code. A lot of that knowledge sits in people’s heads (tribal knowledge).

That’s another reason enterprises are bound to move slower with vibe coding unless they fix cultural issues. Every CTO should focus on documenting tribal knowledge so that AI agents can work more effectively.

In terms of which vendor to use (Codex, Claude, Cursor, Devin, etc.), my advice is to just pick one and get started. My preference is Claude Code if you are a small team. Larger enterprises may need the collaboration features baked into Cursor or Devin.

Examples of documents to create and maintain to empower your code review (and coding agents):

Internal style guides and architectural diagrams

Your specific security requirements (e.g., “all database queries must use parameterized statements,” “all API calls must have rate limiting policy X attached”), etc.
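Rules like these work best when they are concrete enough to check mechanically. For the parameterized-statements rule, here is what compliant and non-compliant code might look like, using Python’s built-in `sqlite3` module as a stand-in for whatever database driver you actually use. The `users` table and the function names are illustrative only.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, email: str):
    # Compliant: the driver binds `email` as data via the ? placeholder,
    # so input like "x' OR '1'='1" is compared literally, never executed as SQL.
    return conn.execute("SELECT id, email FROM users WHERE email = ?", (email,)).fetchone()


def find_user_unsafe(conn: sqlite3.Connection, email: str):
    # Non-compliant: string interpolation lets the input rewrite the query.
    # A review agent given the rule above should flag this pattern.
    return conn.execute(f"SELECT id, email FROM users WHERE email = '{email}'").fetchone()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO users (email) VALUES (?)", ("alice@example.com",))

payload = "x' OR '1'='1"
print(find_user(conn, payload))         # None
print(find_user_unsafe(conn, payload))  # (1, 'alice@example.com')
```

The injected string returns nothing through the parameterized query but leaks a row through the interpolated one. Spelling the rule out in your docs gives a review agent a clear pattern to flag.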

Priority 2: Streamline the downstream processes in LOCKSTEP with vibe coding

You need to start investing in DevSecOps automation from Day 1.

Most companies want to adopt Cursor or Copilot at scale first, then figure out the rest. That’s only going to lead to piles of PRs and fatigue for the senior developers doing code reviews.

Instead, don’t scale code generation without also streamlining security scanning, testing, and deployment. You need to manage the load at every step of the process and clear blockages as they appear.

Of course, since technology is moving fast, whatever you build may become obsolete in 3 months. But that shouldn’t deter you from getting started, since it’s an opportunity to learn.

Iterate on your processes (the Kaizen method) and unblock whichever nodes in the process are constrained. Start by automating more coding. Then automate review, security, and testing. Then increase code generation velocity again. Then update your automated test suite. Rinse and repeat.
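A toy version of this loop, assuming (hypothetically) that each round of automation doubles the capacity of whichever stage is currently the constraint. Stage names and numbers are made up.

```python
def kaizen_rounds(capacities: dict[str, int], rounds: int, factor: int = 2) -> list[tuple[str, int]]:
    """Toy Kaizen loop: each round, find the current bottleneck and multiply its capacity.

    Returns the (bottleneck, throughput) observed at the start of each round.
    Hypothetical model: assumes automating a stage scales its capacity by `factor`.
    """
    caps = dict(capacities)  # don't mutate the caller's dict
    log = []
    for _ in range(rounds):
        bottleneck = min(caps, key=caps.get)  # ties go to the first stage listed
        log.append((bottleneck, caps[bottleneck]))
        caps[bottleneck] *= factor  # "automate" the constrained stage
    return log


stages = {"development": 20, "review": 25, "security": 30, "testing": 40}
print(kaizen_rounds(stages, rounds=4))
# [('development', 20), ('review', 25), ('security', 30), ('development', 40)]
```

With these made-up numbers the constraint moves from development to review to security and then back to development, roughly mirroring the cycle above: each fix exposes the next bottleneck.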

At a minimum:

Set up basic security scanning agents that run on every PR; they already perform better than most human developers at flagging security issues. Both Anthropic and OpenAI offer agents that perform extensive security reviews on your PRs. You can then layer your company- and domain-specific policies on top. Start there and see how it performs instead of throwing your hands up.

Leverage agents to completely automate creating and executing test suites before PRs are opened. Have agents periodically scan your codebase to increase test coverage. You can literally just ask Claude to do that.

Automate big chunks of browser-based QA with LLMs. Yes, LLMs can use browsers and perform QA now. You shouldn’t be relying on human QA team members to perform basic end-to-end tests.

Set up agentic workflows that assist with incident response so you can catch issues in production and triage faster. Agents are faster than most human developers at performing root cause analysis. Leverage that.

The pipeline needs to handle the volume before you increase the volume. If you need specific advice on how to create these automations, or which vendors to leverage, DM me.

Priority 3: Consider using managed services for things like security ops

There are some areas where it’s wiser to buy, versus building in-house solutions. One of them is all aspects of security (security review, threat detection, sec ops, etc).

One side effect of the agentic AI behind vibe coding is that it has made cybersecurity far more challenging. Smart AI agents cause a paradigm shift in security because, unlike humans:

agents can adapt in real time, autonomously, to the target’s security defenses

agents are much cheaper to run than hiring human hackers

agents don’t fatigue, they run 24/7

agents are already becoming better than humans at hacking

Defending against agentic cybersecurity threats is not something you should own in-house. The area is evolving fast. You want it off your plate completely, not another thing to maintain.

That said, I don’t think traditional vendors like CrowdStrike or Okta are well equipped to solve this either. They are too fragmented, each solving a different part of the security problem (endpoint security versus identity, for example), when these should all be integrated.

I would lean more on working directly with OpenAI and Anthropic for guidance on how to handle security reviews and cybersecurity.


Quick Landscape: What’s Out There

This is not an endorsement of any company in particular, but a small subset of vendors you can check out.

Code review: Obviously the massive pain point right now, and a lot of innovation is happening. CodeRabbit and Graphite exist, as well as Devin, Sourcegraph, etc. Everyone’s putting out a code review offering, but none of them looks like the clear winner yet.

Security reviews, security ops: The labs (OpenAI, Anthropic) will bundle security review capability into coding agents, and I expect them to offer products as well. But specialized vendors can still add value. Snyk, Semgrep, etc. offer AI-powered automated vulnerability detection. There’s also an overlap with the AI-native cybersecurity market, which has companies like Abnormal (email security), Legion (sec ops), etc.

Testing/QA: Out of the box, coding agents are already really good at generating tests and managing test suites. QA Wolf, Mabl, Testim, and Mometic handle automated UI and regression testing. There’s less incumbent dominance here than in other categories, and more greenfield opportunity.

Observability and Incident Response: Datadog and Honeycomb dominate runtime monitoring and incident response, and they have AI agent features that automate parts of SRE tasks. Traversal is just one of many startups trying to sell a full-blown AI agent in this market.