What would a $2,000-a-month ChatGPT look like?

The future of AI application pricing will be bimodal

The idea of paying $2,000 per month for an AI chatbot might seem extreme, even absurd, to the average person. But not only is this market real—it’s likely massive.

A trivial way to create a $2,000-a-month AI product is to wrap a $24,000-a-year dataset with a chat interface. But Deep Research showed that building best-in-class agents is another recipe for creating “luxury” AI products.

In fact, “high end” knowledge workers may be the most profitable segment for AI companies. They are educated, motivated, and wealthy buyers working in competitive domains where a small edge in intelligence can be worth millions (e.g., drug discovery, financial trading, tax law, and medicine). Here, the demand for additional IQ is inelastic. It is also easy to tap into their deep competitive drives, insecurities, and status anxieties.

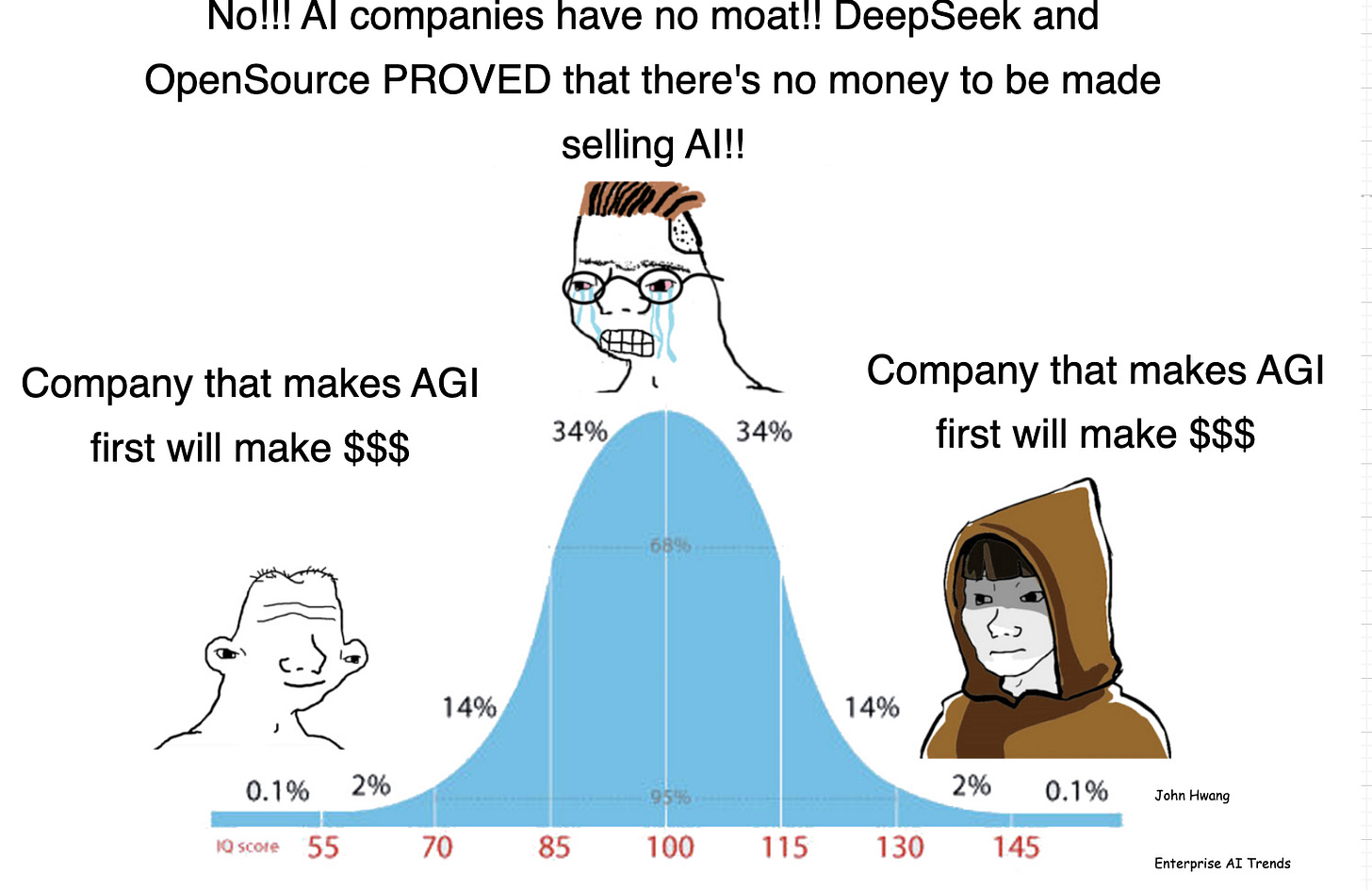

This goes against the narrative that chatbots have no moat, which gained traction after DeepSeek’s release. For “high-value” knowledge work, cost optimization is not the end goal. Cost is maybe third or fourth among purchasing criteria when the software is critical for staying in business or crushing the competition. Case in point: Bloomberg (finance, $20K+ a year), CoStar (commercial real estate, $15K+ a year), SAS (statistical modeling, $2K a year), and Westlaw (legal research) are still alive and thriving.

So in this post, we will discuss the following:

AI software pricing will go bimodal—starting this year. AI apps will split into ‘mass consumer’ AI and ‘high-end, enterprise-grade’ AI, with little to no middle ground. This high-end software will take two main forms: vertical enterprise AI and premium usage-based AI.

Vertical enterprise AI. The most successful enterprise AI products will be powered by domain-specific models, fine-tuned on top of the best frontier models from OpenAI, Anthropic, Google, etc. Winning in this space requires immense capital, deep industry expertise, and access to proprietary data—barriers that most startups simply can’t overcome.

Premium usage-based AI. Some AI products will maximize revenue by delivering exceptional, one-off outputs with usage-based pricing. Take ChatGPT’s Deep Research: if it charged per report, it could easily make $2K per month from power users, a price consultants already command per report for similar deliverables. In this model, AI is not just software; it’s a high-value service.

As the AI software market splits into “high end” and “mass market”, the middle will get killed. From below, ChatGPT, Claude, and others will keep bundling capabilities and eating into specialized UIs. From above, “high end” competitors will run away with better models or proprietary data. So at the end of this post, I will share some ideas on how startups can avoid this fate.

So how do we build these “high end” AI products? Let’s explore the above ideas further.

The Bimodal Future of AI Pricing

As the dust settles in AI application software, pricing will go bimodal: a low end, a high end, and little in between. This structure isn’t new; it mirrors what has existed in finance, intelligence, and specialized enterprise software for decades.

Consider the financial software market. The Bloomberg Terminal, which costs $20K+ per person per year, still dominates the financial terminal market at the high end. On the content side, there are $2,000-a-month buy-side newsletters. At the same time, you have $30-a-month subscriptions to websites like Seeking Alpha and Finviz. There are only a few success stories in the “mid-tier” category.

Similarly, in AI, the low end has been established by ChatGPT, Claude, and others, with very generous free tiers. But the high end will start forming fast, beginning this year, led by OpenAI and Anthropic’s push into enterprise AI and prosumers. (I already wrote about OpenAI’s enterprise AI strategy here.)

Essentially, OpenAI and Anthropic are offering, and will keep offering, task-specific, fine-tuned models as part of their go-to-market strategy. These models are then wrapped into vertical AI agents, which can be sold at enterprise prices. For example, OpenAI has recently been building up its healthcare practice. The flip side is that the mass market will never see these advanced models, creating a divide among people in terms of access to AI models.

So what’s the fundamental force that allows AI companies to command higher prices?